LM Studio

About LM Studio

LM Studio lets users run large language models (LLMs) locally, keeping data on their own machine and allowing offline access to models such as Llama and Mistral. Its built-in Chat UI lets users interact with both models and documents, which makes it a good fit for developers and creatives who want to use AI without compromising data security.

LM Studio is free for personal use, and business options are available for organizations that need tailored solutions. Upgrading unlocks additional features, such as expanded model support and dedicated assistance.

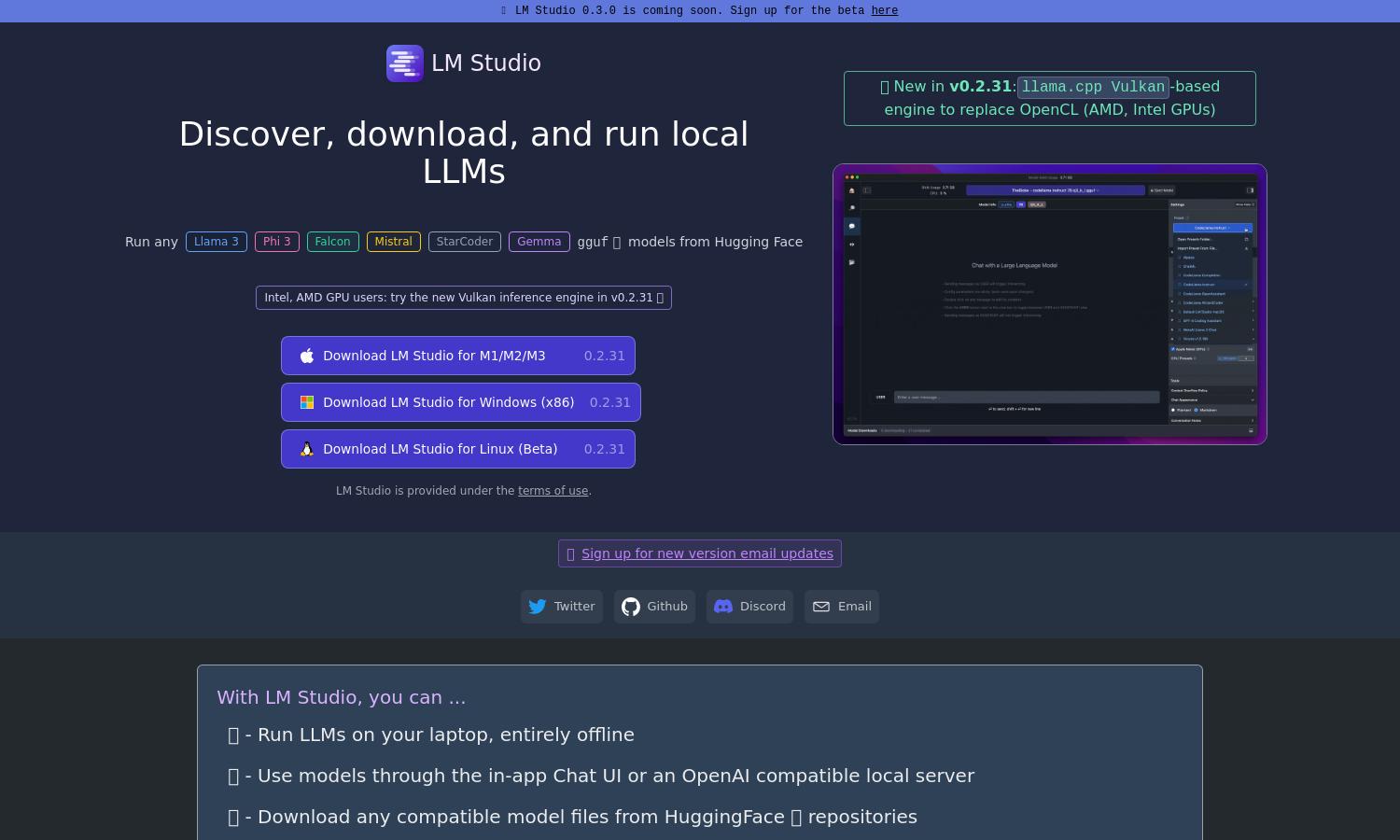

LM Studio’s interface is designed for straightforward navigation. Its layout keeps the main tools within easy reach, so users can quickly explore models and use the in-app chat.

How LM Studio works

Users start by downloading LM Studio and installing it on a compatible machine, such as an Apple Silicon (M1/M2/M3) Mac or a PC whose processor supports AVX2. After onboarding, they can browse the Discover page to find models, use the in-app Chat UI to interact with documents, and run large language models entirely offline.
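As a rough illustration of the hardware requirement above, the Python sketch below guesses whether a machine matches one of the two device types named here. It is a heuristic written for this guide, not part of LM Studio: the function names `cpuinfo_has_avx2` and `looks_compatible` are hypothetical, it assumes Apple Silicon Macs report `arm64` through `platform.machine()`, and it can only read CPU flags on Linux (via `/proc/cpuinfo`). On other systems it returns `None` instead of guessing.

```python
import platform
from pathlib import Path
from typing import Optional


def cpuinfo_has_avx2(cpuinfo_text: str) -> bool:
    """Return True if a 'flags' line in /proc/cpuinfo-style text lists avx2."""
    for line in cpuinfo_text.splitlines():
        key, sep, value = line.partition(":")
        # Real cpuinfo keys are padded with tabs, so strip before comparing.
        if sep and key.strip() == "flags" and "avx2" in value.split():
            return True
    return False


def looks_compatible() -> Optional[bool]:
    """Hypothetical heuristic: True/False when it can tell, None when it cannot."""
    system = platform.system()
    machine = platform.machine().lower()
    if system == "Darwin":
        # Apple Silicon (M1/M2/M3) Macs normally report "arm64";
        # an x86_64 Python running under Rosetta reports "x86_64" instead.
        return machine == "arm64"
    if system == "Linux":
        cpuinfo = Path("/proc/cpuinfo")
        if cpuinfo.exists():
            return cpuinfo_has_avx2(cpuinfo.read_text())
    # The standard library offers no portable way to read CPU flags elsewhere.
    return None


if __name__ == "__main__":
    print(looks_compatible())
```

A result of `None` only means the script cannot tell; LM Studio’s published system requirements remain the authoritative reference.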

Key Features of LM Studio

Offline Operation

With LM Studio, users can run LLMs such as Llama and Mistral entirely offline. Because the models run on the local machine, data stays private, and users keep uninterrupted access to their models without needing an internet connection.

In-app Chat UI

LM Studio’s in-app Chat UI gives users an intuitive way to work with their local documents and models. Chatting with a model directly inside the app makes it easier to put local LLMs to use in real time across a variety of tasks.

Model Compatibility

LM Studio supports a wide range of large language models, including Llama, Mistral, and Gemma. This broad support lets users download different models, integrate them into their workflows, and choose the LLM that best fits their needs.